Here we will build an artificial neural network (ANN) classification model for Fashion MNIST, which is an image classification task.

Image Classification with ANN Using Fashion MNIST Dataset

Objective

To build an artificial neural network that classifies images of clothing items, such as T-shirts, trousers, and shoes, into predefined categories.

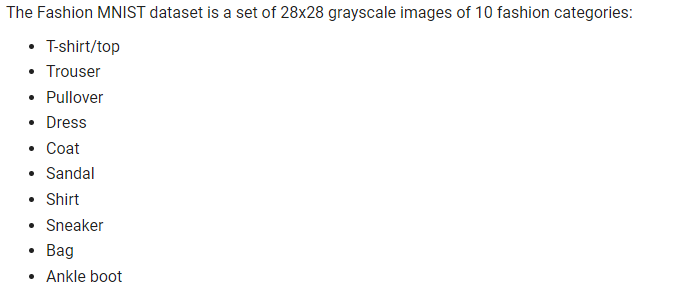

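Dataset Info:

Fashion MNIST contains 70,000 grayscale images of 28×28 pixels, split into 60,000 training images and 10,000 test images. Each image is labeled with one of 10 clothing categories.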

Import the required Libraries

import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Flatten
from sklearn.metrics import classification_report, confusion_matrix

Load and Preprocess the dataset

fashion_mnist_dataset = tf.keras.datasets.fashion_mnist
(X_train, y_train), (X_test, y_test) = fashion_mnist_dataset.load_data()

Normalize the pixel values of the images to the range [0, 1]

# Pixel values are integers from 0 to 255, so dividing by 255.0 scales them to [0, 1]
X_train = X_train / 255.0
X_test = X_test / 255.0

Exploratory Data Analysis

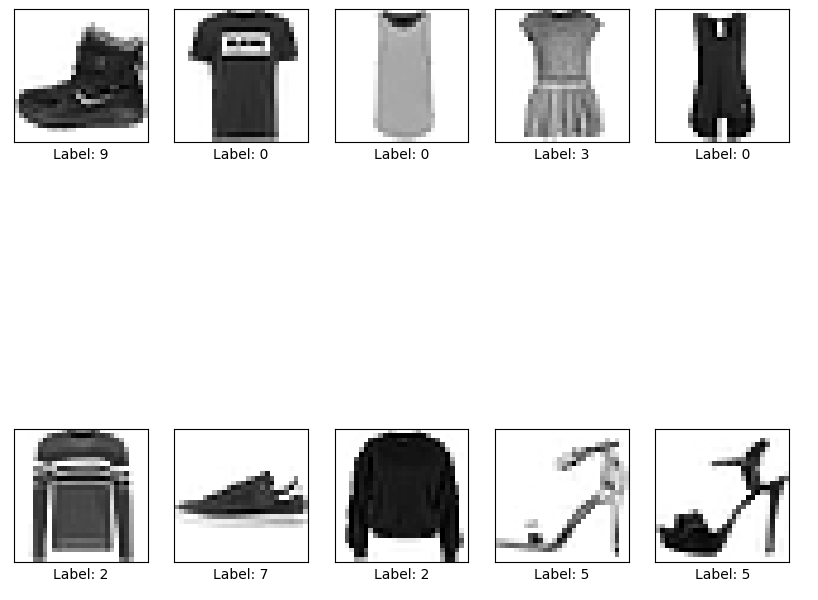

Plot the first 10 images in the training dataset

# Plot the first 10 images in the training dataset
plt.figure(figsize=(10, 10))
for i in range(10):
    plt.subplot(2, 5, i + 1)  # 2 rows x 5 columns grid
    plt.xticks([])
    plt.yticks([])
    plt.grid(False)
    plt.imshow(X_train[i], cmap=plt.cm.binary)
    plt.xlabel(f'Label: {y_train[i]}')
plt.show()

Each image corresponds to a clothing category with a label (0–9), which we will map as follows:

- 0 = T-shirt/top

- 1 = Trouser

- 2 = Pullover

- 3 = Dress

- 4 = Coat

- 5 = Sandal

- 6 = Shirt

- 7 = Sneaker

- 8 = Bag

- 9 = Ankle boot
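To show readable names instead of integer labels, the mapping above can be stored in a list indexed by label. This is a minimal sketch. `CLASS_NAMES` and `label_to_name` are hypothetical names chosen for illustration and are not part of Keras.

```python
# Hypothetical helper: the position of each name equals its Fashion MNIST label (0-9)
CLASS_NAMES = [
    'T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
    'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot',
]


def label_to_name(label):
    """Return the clothing category for an integer label in the range 0-9."""
    label = int(label)  # also accepts NumPy integer scalars such as y_train[i]
    if not 0 <= label < len(CLASS_NAMES):
        raise ValueError(f"label must be in 0-9, got {label}")
    return CLASS_NAMES[label]


print(label_to_name(9))  # Ankle boot
```

In the plotting loop, `plt.xlabel(label_to_name(y_train[i]))` would then display the category name. The same list could also be passed as `target_names` to `classification_report`.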

Distribution of Classes

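# Distribution of the labels in the training dataset
unique, counts = np.unique(y_train, return_counts=True)
# Convert NumPy scalars to plain ints so the dict prints the same on all NumPy versions
class_distribution = {int(label): int(count) for label, count in zip(unique, counts)}
print("Class Distribution:", class_distribution)

Output:

Class Distribution: {0: 6000, 1: 6000, 2: 6000, 3: 6000, 4: 6000, 5: 6000, 6: 6000, 7: 6000, 8: 6000, 9: 6000}

Every class has exactly 6,000 training images, so the training set is perfectly balanced.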

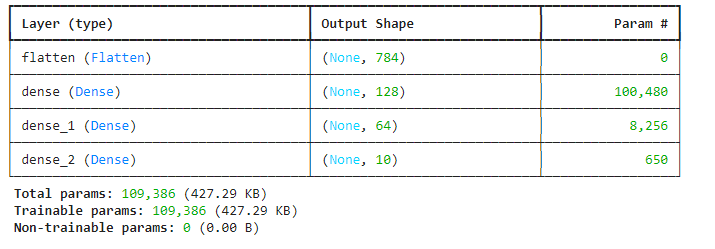
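The labels are small non-negative integers, so `np.bincount` produces the same per-class counts as `np.unique(..., return_counts=True)` in a single call. The sketch below runs on a small made-up label array, not on the real `y_train`.

```python
import numpy as np

# Made-up labels standing in for y_train (the real array holds 60,000 labels in 0-9)
labels = np.array([0, 1, 1, 2, 9, 9, 9])

# Count of each label 0..9; minlength keeps classes with zero samples in the result
label_counts = np.bincount(labels, minlength=10)
distribution = {label: int(count) for label, count in enumerate(label_counts)}
print(distribution)
# {0: 1, 1: 2, 2: 1, 3: 0, 4: 0, 5: 0, 6: 0, 7: 0, 8: 0, 9: 3}

# A perfectly balanced dataset has every count equal
print("Balanced:", bool(np.all(label_counts == label_counts[0])))  # Balanced: False
```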

Build the Artificial Neural Network (ANN) model

# Initialize the model
model = Sequential()
# Flatten the 28x28 images into a 1D array (28*28 = 784)
model.add(Flatten(input_shape=(28, 28)))
# Add hidden layers with ReLU activation
model.add(Dense(units=128, activation='relu'))
model.add(Dense(units=64, activation='relu'))
# Output layer with 10 units for the 10 classes, using softmax activation
model.add(Dense(units=10, activation='softmax'))

# Compile the model; sparse_categorical_crossentropy expects integer labels (0-9), as in y_train
model.compile(optimizer='adam', loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# Model summary
model.summary()

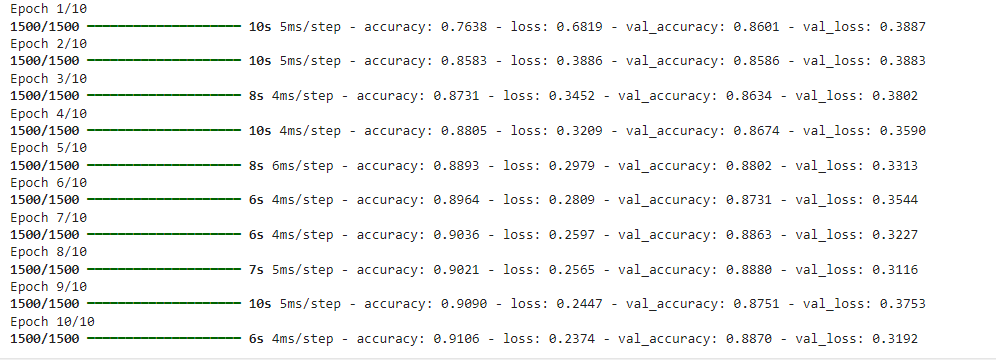
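The parameter counts in the summary follow directly from the layer sizes. A `Dense` layer with `n_in` inputs and `n_out` units has `n_in * n_out` weights plus `n_out` biases, and `Flatten` has no parameters. The sketch below reproduces that arithmetic for this 784-128-64-10 network. `dense_params` is a hypothetical helper, and its totals should agree with the `Param #` column of `model.summary()`.

```python
def dense_params(n_in, n_out):
    """Trainable parameters of a fully connected layer: weight matrix plus bias vector."""
    return n_in * n_out + n_out


# Flatten output size, then the units of the three Dense layers
layer_sizes = [28 * 28, 128, 64, 10]

per_layer = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
print(per_layer)       # [100480, 8256, 650]
print(sum(per_layer))  # 109386
```

Almost all of the parameters sit in the first hidden layer, because every one of the 784 pixels connects to every one of its 128 units.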

Model training

# Train the model, holding out the last 20% of the training data for validation
history = model.fit(X_train, y_train, validation_split=0.2, epochs=10,
                    batch_size=32)

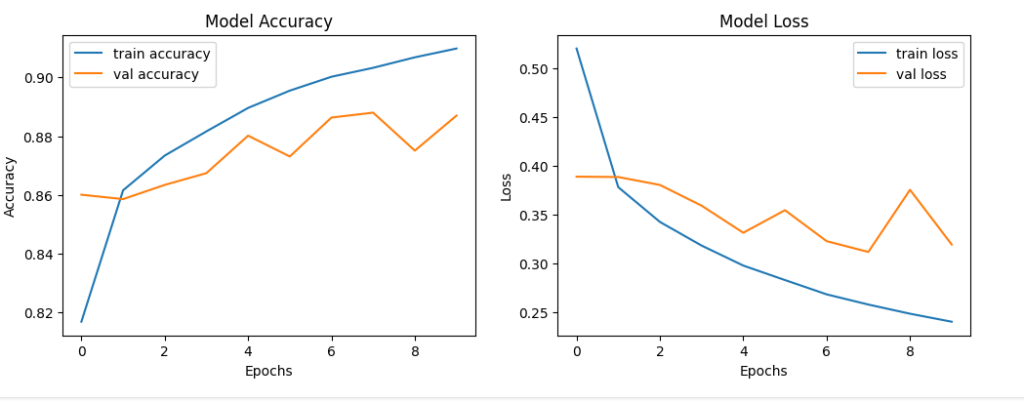
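The loss minimized during training is sparse categorical cross-entropy. The softmax layer turns each row of 10 scores into probabilities. The loss for one image is the negative log of the probability assigned to its true integer label, averaged over the batch. Keras computes this internally. The NumPy sketch below only illustrates the computation, using made-up scores (logits) for a batch of two images.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax; subtracting the row max avoids overflow in exp."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def sparse_categorical_crossentropy(y_true, probs, eps=1e-7):
    """Mean of -log(probability of the true class); y_true holds integer labels."""
    probs = np.clip(probs, eps, 1.0 - eps)  # avoid log(0)
    true_class_probs = probs[np.arange(len(y_true)), y_true]
    return float(-np.log(true_class_probs).mean())


# Made-up scores for 2 images over the 10 classes
logits = np.zeros((2, 10))
logits[0, 9] = 5.0  # image 0: confident "Ankle boot" (label 9)
logits[1, 3] = 1.0  # image 1: leaning toward "Dress" (label 3)
probs = softmax(logits)

y_true = np.array([9, 0])  # image 1 is actually a T-shirt/top, so it adds most of the loss
print(probs.sum(axis=1))  # each row sums to 1 (up to rounding)
print(sparse_categorical_crossentropy(y_true, probs))
```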

Model Evaluation

# Plot accuracy and loss over epochs
plt.figure(figsize=(12, 4))

# Accuracy plot
plt.subplot(1, 2, 1)
plt.plot(history.history['accuracy'], label='train accuracy')
plt.plot(history.history['val_accuracy'], label='val accuracy')
plt.title('Model Accuracy')
plt.xlabel('Epochs')
plt.ylabel('Accuracy')
plt.legend()

# Loss plot
plt.subplot(1, 2, 2)
plt.plot(history.history['loss'], label='train loss')
plt.plot(history.history['val_loss'], label='val loss')
plt.title('Model Loss')
plt.xlabel('Epochs')
plt.ylabel('Loss')
plt.legend()

plt.show()

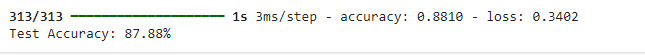
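`history.history` is a plain dictionary that maps each metric name (`'loss'`, `'accuracy'`, `'val_loss'`, `'val_accuracy'`) to a list with one value per epoch, so the curves can also be summarized numerically. The sketch below reports the epoch with the lowest validation loss and the train/validation gap at that epoch. `best_epoch` is a hypothetical helper, and the values are made up rather than results from this model.

```python
def best_epoch(history_dict, monitor='val_loss'):
    """Return (1-based epoch, value) where the monitored loss was lowest."""
    values = history_dict[monitor]
    index = min(range(len(values)), key=values.__getitem__)
    return index + 1, values[index]


# Made-up values with the same structure as history.history
fake_history = {
    'loss':     [0.50, 0.37, 0.33, 0.30, 0.28],
    'val_loss': [0.42, 0.38, 0.35, 0.36, 0.37],
}

epoch, val_loss = best_epoch(fake_history)
gap = val_loss - fake_history['loss'][epoch - 1]
print(f"Lowest val_loss {val_loss:.2f} at epoch {epoch}; train/val gap {gap:.2f}")
# Lowest val_loss 0.35 at epoch 3; train/val gap 0.02
```

When validation loss rises while training loss keeps falling, as after epoch 3 in these made-up numbers, the model is usually starting to overfit. Keras can stop training at that point automatically with `tf.keras.callbacks.EarlyStopping(monitor='val_loss', restore_best_weights=True)`.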

Check model performance on Test data

# Evaluate the model on the test data
test_loss, test_accuracy = model.evaluate(X_test, y_test)
print(f"Test Accuracy: {test_accuracy * 100:.2f}%")

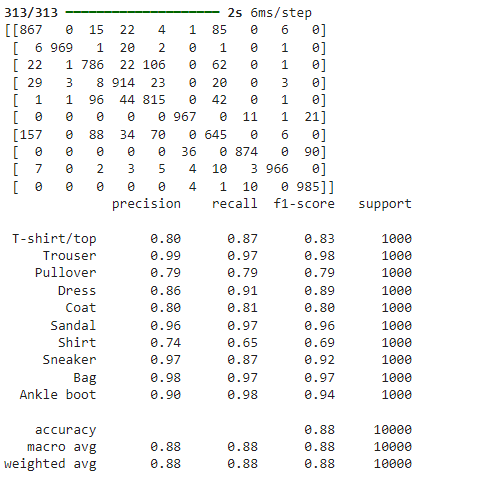
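`model.evaluate` returns the loss followed by the compiled metrics, which here gives `[loss, accuracy]`. With integer labels and a softmax output, accuracy is essentially the fraction of images whose highest-probability class equals the true label. The next step uses the same `np.argmax(..., axis=1)` operation to turn probabilities into predicted labels. The sketch below applies it to made-up probabilities for four images and only three classes to keep it short.

```python
import numpy as np

# Made-up softmax outputs for 4 images over 3 classes (the real model outputs 10 columns)
probs = np.array([
    [0.7, 0.2, 0.1],
    [0.1, 0.8, 0.1],
    [0.3, 0.3, 0.4],
    [0.6, 0.3, 0.1],
])
y_true = np.array([0, 1, 2, 1])

y_pred = np.argmax(probs, axis=1)     # predicted class per row: [0, 1, 2, 0]
accuracy = np.mean(y_pred == y_true)  # 3 of 4 predictions are correct
print(f"Accuracy: {accuracy * 100:.2f}%")  # Accuracy: 75.00%
```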

Confusion Matrix and Classification Report

# Predict the labels for the test set (index of the highest softmax probability)
y_pred = np.argmax(model.predict(X_test), axis=1)

# Confusion matrix
conf_matrix = confusion_matrix(y_test, y_pred)
print(conf_matrix)

# Classification report
print(classification_report(y_test, y_pred, target_names=[
    'T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
    'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']))
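In scikit-learn's `confusion_matrix`, row `i` counts images whose true label is `i` and column `j` counts those predicted as `j`, so the diagonal holds the correct predictions. Per-class recall, as reported by `classification_report`, is the diagonal divided by the row sums. Precision is the diagonal divided by the column sums. The sketch below repeats that bookkeeping in NumPy on made-up labels and also finds the most frequent confusion. `confusion_counts` is a hypothetical helper.

```python
import numpy as np


def confusion_counts(y_true, y_pred, num_classes):
    """Rows are true labels, columns are predicted labels (same layout as sklearn)."""
    matrix = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(matrix, (y_true, y_pred), 1)  # add 1 at each (true, predicted) pair
    return matrix


# Made-up labels for 3 classes
y_true = np.array([0, 0, 0, 1, 1, 2, 2, 2])
y_pred = np.array([0, 0, 1, 1, 1, 2, 0, 2])

cm = confusion_counts(y_true, y_pred, num_classes=3)
print(cm)
# [[2 1 0]
#  [0 2 0]
#  [1 0 2]]

recall = cm.diagonal() / cm.sum(axis=1)     # correct / all images of that true class
precision = cm.diagonal() / cm.sum(axis=0)  # correct / all images predicted as that class
accuracy = cm.trace() / cm.sum()            # correct / all images

# Most frequent mistake: the largest off-diagonal cell (first one wins on ties)
off_diag = cm.copy()
np.fill_diagonal(off_diag, 0)
true_cls, pred_cls = np.unravel_index(off_diag.argmax(), off_diag.shape)
print(f"Most common confusion: true {true_cls} predicted as {pred_cls}")
```

On the real `conf_matrix`, the same off-diagonal search points to the pair of clothing categories the model mixes up most often.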

Save the Model for deployment

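# Save the model in HDF5 (.h5) format
# (recent Keras versions treat .h5 as a legacy format and recommend the native .keras format)
model.save('fashion_mnist_ann_model.h5')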
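At deployment time, a new image has to go through the same preprocessing as the training data: shape 28×28 and pixel values scaled to [0, 1]. It also needs a batch dimension, because Keras models predict on batches. The sketch below assumes the saved file is loaded with `tf.keras.models.load_model`. `predict_clothing` and its `predict_fn` argument are hypothetical names. The helper accepts any callable, so it can be exercised without TensorFlow.

```python
import numpy as np

CLASS_NAMES = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']


def predict_clothing(image, predict_fn):
    """Classify one 28x28 grayscale image (hypothetical helper).

    image: array of shape (28, 28), raw 0-255 pixels or already scaled to [0, 1].
    predict_fn: callable mapping a (batch, 28, 28) array to (batch, 10) probabilities,
                e.g. the .predict method of the loaded Keras model.
    """
    image = np.asarray(image, dtype=np.float32)
    if image.shape != (28, 28):
        raise ValueError(f"expected shape (28, 28), got {image.shape}")
    if image.max() > 1.0:  # heuristic: values above 1 mean raw 0-255 pixels
        image = image / 255.0  # same scaling as used for X_train and X_test
    batch = image[np.newaxis, ...]  # add a batch dimension: (1, 28, 28)
    probs = np.asarray(predict_fn(batch))[0]  # take the single row of probabilities
    label = int(np.argmax(probs))
    return CLASS_NAMES[label], float(probs[label])
```

With the trained model, a call might look like `predict_clothing(X_test[0], tf.keras.models.load_model('fashion_mnist_ann_model.h5').predict)`. It returns the category name and the probability the model assigned to it.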

Conclusion

In this project, we built an artificial neural network (ANN) that classifies Fashion MNIST images with reasonable accuracy. The model performed well overall, although it still confused some visually similar clothing items. Because the ANN flattens each image into a 1D vector, it ignores the spatial arrangement of pixels. Convolutional neural networks (CNNs) exploit that structure and could be used in future work to improve recognition. Overall, this work shows the effectiveness of deep learning for image classification problems.